The Moral Energy Case for Large Language Models

Destroying the planet one batch at a time

Large language models are getting larger and larger, funded by the seemingly infinite pockets of the internet giants. Apocalyptic predictions declare that because “Money is all you need”, we are witnessing a slow but inevitable race to destroy the environment.

The earth has a finite amount of resources and it’s rational to be careful about how we consume them given that we don’t really have that many alternatives. If you’re bullish about Mars then I’d encourage you to live in Death Valley for a year and let me know how it goes in the comments section.

So will large language models destroy the planet with their energy requirements?

Basics

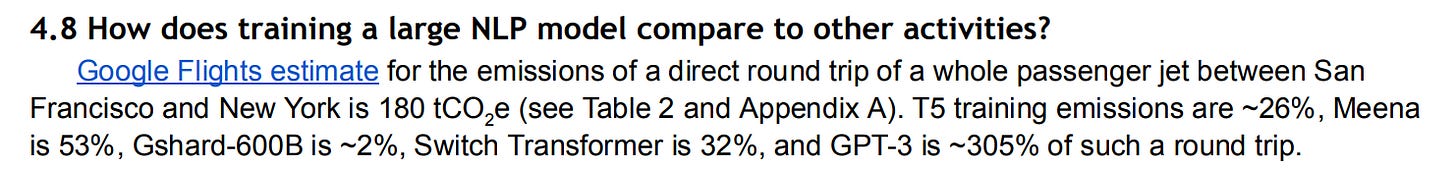

From the brilliant paper by Google AI

Pre-COVID there were about 40M flights per year, while at most a dozen models similar in scale to Switch had been trained.

So in absolute terms it feels like this isn’t the highest leverage area to improve on but even in relative terms I’d like to make the case that

Large language models are incredibly energy efficient and their use of energy is more than morally justifiable

The Virtues of Saving

If you’ve spent a weekend at any large hotel chain, you’ve surely seen their note on reusing your towel so you can save the environment.

There are already enough incentives in the world for anyone to save money so there’s no virtue in signaling that you also care about the environment. It’s only virtuous if there’s a need for self sacrifice.

Energy efficient language models are about saving money not the environment

Some companies have resorted to “parameter count porn” where they declare having scaled ever larger models in an attempt to outclass their competitors. This is an easy marketing strategy to execute today, but given balance sheet constraints it will likely stop soon enough.

Justifiable Energy

The simplest way to reduce the energy footprint of large language models is to stop training large language models.

At the end of the day, if you don’t believe that large language models are valuable then you won’t spend time, money and energy to train them. The challenge is then to justify why others are ready to spend mind boggling amounts of money on them. People essentially vote with their electricity bill on what they deem to be valuable.

As a thought experiment, write down a list of all the ways in which you consume energy, either directly or indirectly, and rank them by their importance to you. Now ask your friend or spouse to do the same and compare lists. Do they look the same?

A more extreme version of the above experiment is to choose a few uses of energy that you think are completely useless. Do you think they should be banned? I’m bald and don’t need a hairdryer, and even though all the hairdryers in the world together consume a lot of energy, I don’t worry too much about it.

Increasing Energy per capita is a good measure of increasing quality of life.

I hope this exercise makes it clear how difficult it is to stack rank the importance of different uses of energy, and how quickly the exercise can turn dystopian.

People vote with their electricity bill

One way to avoid such an exercise is to create an abundance of renewable energy so that everyone’s needs can be satisfied. This is something that the nuclear crowd understands.

Locality

Energy is usually consumed close to where it is produced

Large language models are an exception to this. You can be sipping coffee at Blue Bottle while a multi-million dollar job is being kicked off in Antarctica, in a data center powered by leaked natural gas that would otherwise harm the environment.

Alternatively, you could send a job to a data center co-located with a nuclear power plant; nuclear plants are today’s most efficient way of creating large amounts of energy. Nuclear power plants have failed, or are perceived to have failed, as an experiment for 3 reasons:

1. Politically unacceptable - aka: Chernobyl

2. Large initial investment required - needs governments or companies focused on the long term

3. No energy supply elasticity - people don’t consume energy when sleeping and you can’t turn off reactors

Large models can be trained in data centers located in remote areas, which solves 1, and they can address 2 and 3 by acting as a baseline bidder for nuclear energy that would otherwise be wasted during low-demand hours.

It’s unlikely we’ll have a future where each academic trains their own language models from a few solar panels on their windows. What’s more likely is that energy production will be centralized and/or subsidized by the government.

Large language model training will be democratized if energy production becomes cheaper not if energy consumption ceases to exist.

Centralization vs Decentralization

My speculation is that more scrutiny is being directed at the energy costs of large models because they invalidate a large chunk of academic research. So the argument isn’t really about large models consuming too much energy but about them not having the right to consume it at all.

However, language models are shockingly cost efficient when you take into account that they are:

Pre-trained in an unsupervised way on internet data

Used for downstream tasks across languages, modalities, teams and companies

Checkpoint-able so that no batch is ever wasted

Centralization is cheap and Decentralization is expensive. What you choose depends more on a moral tradeoff between control and costs. As of today, we can’t have cheap state of the art language models without relying on large companies.

A former colleague has described working with large language models as an alienating experience where all improvements are led by a central team. It’s an efficient setup for groups but demotivating for an individual. It also makes tackling bias issues much more difficult, since you have to rely on a third party to deal with them as opposed to just solving them yourself.

Models are not scale invariant: you can’t take a sample of a bunch of smaller models and make claims about larger ones. You can’t decentralize away the Large Hadron Collider by funding many macro scale experiments.

Governments and ML funding

Understandably many researchers feel distraught that they don’t have the same kinds of compute resources that large industry labs have. So in what seems to be a logical extension of the NSF grant program, shouldn’t governments provide more funding to ML researchers to make sure that the public still benefits from advancements in AI?

If we’re optimizing for environmental impact then the answer is a resounding no. Offering compute resources for “free” effectively destroys all incentives to make models efficient.

More importantly, there’s already an incentive to make models cheaper - the company balance sheet. Large companies are spending millions of dollars on larger models because it’s materially useful to their businesses especially if you consider that they’re an excellent layer on which you can then build search or ranking models.

Projecting exponential distributions to infinity is not useful

The Cloud Money Printer

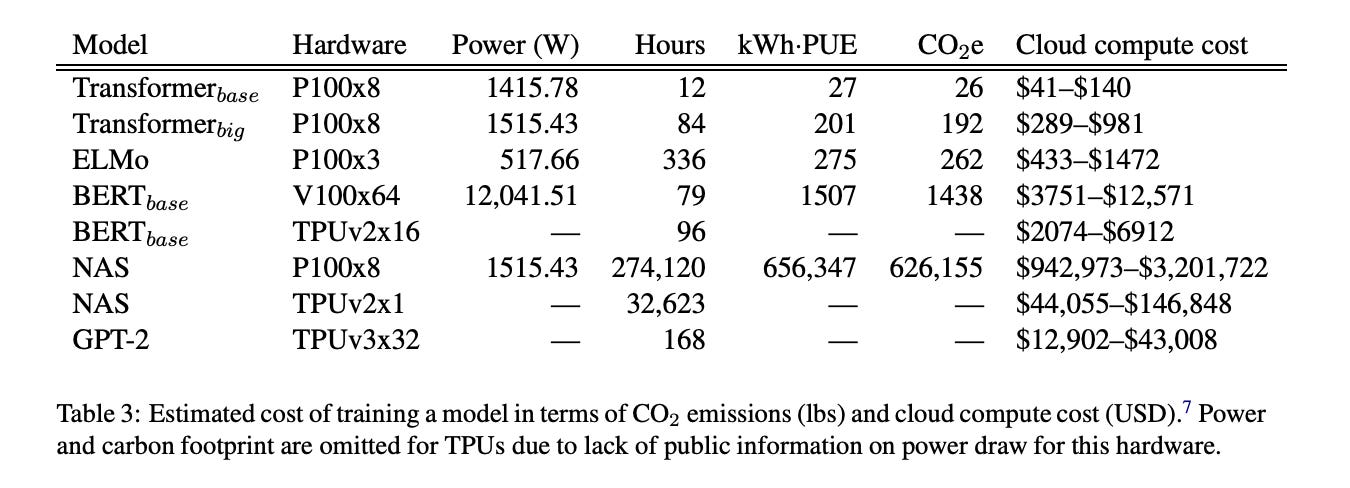

The cost to train GPT-2 is estimated at the upper end at $43K, which at first is a shockingly large number if you’re a Machine Learning hobbyist, until you consider that AWS, for example, made about $13B in profit in 2020. So they could hypothetically train GPT-2 roughly 300K times, but they won’t because they know that the opportunity cost doesn’t justify it. And even if they did, the total energy consumption would still be dwarfed by the airline industry.
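The division behind that comparison can be checked with a quick sketch. It uses only the two rough figures quoted above; the function name `affordable_runs` is made up for illustration, and the result is a back-of-the-envelope bound rather than a real budget.

```python
# Both figures are the rough estimates quoted in the text, not audited numbers.
GPT2_TRAINING_COST_USD = 43_000        # upper-end estimate for one GPT-2 training run
AWS_PROFIT_2020_USD = 13_000_000_000   # approximate AWS profit for 2020


def affordable_runs(budget_usd: int, cost_per_run_usd: int) -> int:
    """Return how many complete training runs a budget could pay for."""
    return budget_usd // cost_per_run_usd


runs = affordable_runs(AWS_PROFIT_2020_USD, GPT2_TRAINING_COST_USD)
print(f"{runs:,} hypothetical GPT-2 training runs")  # 302,325
```

Integer division is used on purpose: a partially funded training run produces no model, so only whole runs count.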

If you’re a hobbyist, your models are likely spending more time in training than in inference. But if you’re a large company, you need lots of inferences to recoup your initial investment (that’s why 16 bit precision and quantized models are such a big deal for larger companies).

So if you’re an accountant for a larger company and notice that the ML team is spending more time on training than inference you should have a chat.

Imagine NVIDIA purposefully slowing down GPUs so you’d buy more of them. They would be displaced by a competitor very quickly.

If you remember one thing from this article, let it be:

Smaller models, more efficient data centers and hardware all already have strong economic incentives

Epilogue

I hope this article helps you reason through the subtleties in talking about the energy use and CO2 emissions of large models. I also hope it shows why images like the one below, which compare a car to a language model that can be retrained by thousands of engineers and whose inferences are consumed by millions of consumers, are disingenuous.

Nobody is going to start burning coal in the middle of Palo Alto to train GPT-4

Acknowledgements

Thank you to DataFox, Ainur Smagulova, James Giammona and the Robot Overlord community for helpful feedback.

Large language models carry other significant risks and consequences beyond their environmental impact. These were raised by the former Google Ethical AI team lead in a paper published earlier than the brilliant one by Google AI, which did not even cite it:

https://www.technologyreview.com/2020/12/04/1013294/google-ai-ethics-research-paper-forced-out-timnit-gebru